The Big 4 Are Selling “AI Audits” — But What Are You Really Getting?

Every Big 4 consultancy (Deloitte, PwC, EY, and KPMG) now offers something it calls an “AI audit.” They’re pitching it as the next evolution of trust: AI assurance, responsible AI frameworks, algorithmic transparency. It sounds sophisticated, cutting-edge, and exactly what modern enterprises need to navigate the complexities of artificial intelligence deployment.

But here’s the insider truth: most of these “AI audits” aren’t really audits at all.

At AIAuditExperts.com, we’ve been on both sides of the table. Many of our consultants once worked inside the Big 4’s AI audit practices: designing systems, implementing enterprise solutions, and writing the very playbooks that define how these audits are sold. We’ve sat in the strategy meetings, delivered the presentations, and witnessed firsthand how these services are packaged and delivered to clients across industries.

So, when we tell you that the Big 4’s AI audits are more branding than breakthrough, we say it with authority. We’re not outside critics throwing stones—we’re former insiders who understand exactly what’s being delivered, what’s being promised, and where the critical gaps exist.

Let’s unpack what’s actually going on behind the glossy marketing materials and why our A2A methodology is redefining what a real AI audit looks like in today’s rapidly evolving technological landscape.

1. “AI Audits and More”: What the Big 4 Are Actually Selling

If you search for “Big 4 AI audits and more,” you’ll find an avalanche of shiny press releases and carefully crafted marketing collateral. Each firm has branded its offering differently: Deloitte’s “Trustworthy AI” framework, PwC’s “Responsible AI Toolkit,” EY’s “AI Assurance Model,” and KPMG’s “Lighthouse AI Practice.” They sound futuristic, comprehensive, and exactly what boardrooms want to hear when discussing AI governance.

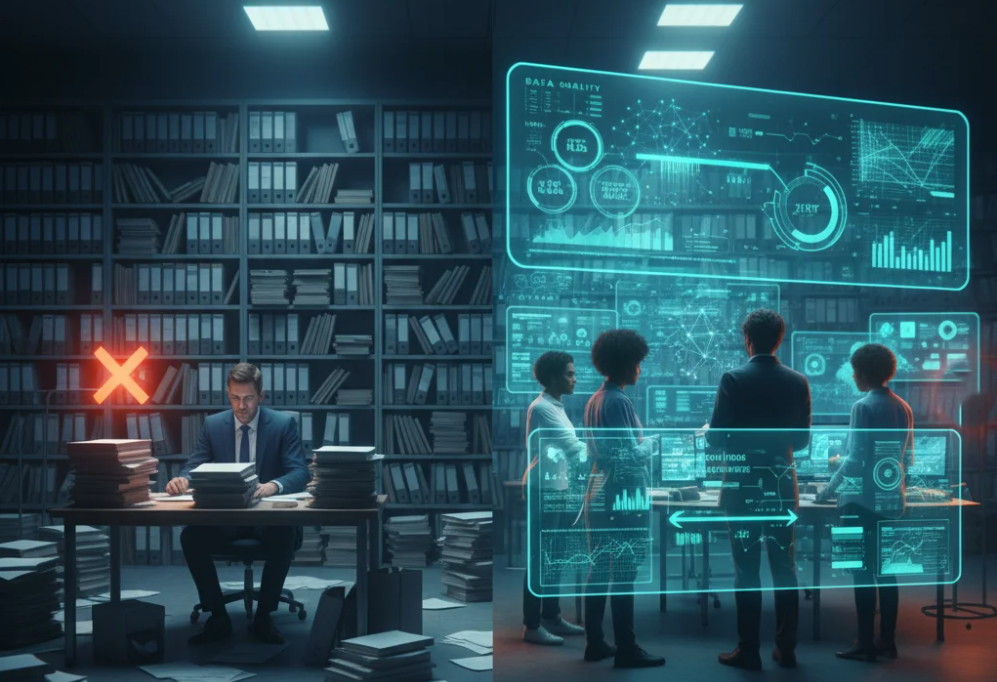

But look closer and you’ll see the same structure repeated across all four firms—policy reviews, process documentation, governance checklists, and stakeholder interviews. These are familiar tools borrowed from traditional audit methodologies, simply repackaged with “AI” in the title.

What’s missing? The actual inspection of the AI itself.

Typical Big 4 AI audit deliverables include interviews with stakeholders to understand AI implementation strategies, data privacy and policy assessments to ensure regulatory compliance, ethical and compliance frameworks that map to existing governance structures, and high-level risk mapping that identifies potential vulnerabilities at the organizational level.

These components are useful, sure, but these are audits around AI, not of AI. The algorithm itself, the training data quality, and the model’s actual performance rarely get examined in depth. It’s like hiring a mechanic to inspect your car, only to have them review the owner’s manual and interview the driver without ever opening the hood.

That’s why we created the A2A (Audit-to-Action) methodology. We test what the model does, not what the paperwork says. We believe that if you’re auditing a self-driving car, you don’t just check the manual—you take it for a drive, test its responses under various conditions, and measure its performance against real-world scenarios.

2. The Meaning Behind “Big 4 AI Audits”: Definition vs. Reality

The web is full of searches for “big 4 ai audits meaning,” “big 4 ai audits definition,” and even “big 4 ai audits synonym.” That’s because nobody really knows what these audits include—not even the firms offering them. The terminology varies wildly depending on which presentation deck you’re reading or which partner you’re speaking with.

Each Big 4 brand defines AI auditing differently. Some call it AI assurance—meaning a high-level risk review that provides stakeholders with confidence in governance processes. Others brand it as responsible AI assessment—essentially an ethics workshop that explores the philosophical implications of AI deployment. A few combine it with data governance audits—still largely documentation-based exercises that focus on policies rather than performance.

All of these approaches are valuable in their own right, but they’re fundamentally incomplete. They address the surrounding ecosystem of AI implementation without examining the core technology itself. This is why an article like “AI Audits Explained and What the Big 4 Get Wrong” is so important: it clarifies what a real AI audit should include and separates marketing hype from practical risk assessment.

At AIAuditExperts.com, we believe a real AI audit should be technical, behavioral, and operational. A proper audit includes data integrity testing to verify that your training data is complete, unbiased, and valid. It requires model validation to confirm that the algorithm performs consistently and fairly across different scenarios and demographic groups. It demands operational usage review to assess whether humans are using AI responsibly within established workflows. Finally, it needs ROI and risk correlation analysis to determine whether AI is genuinely improving your bottom line or simply burning through budget without delivering measurable value.

That’s what A2A delivers—a framework born from experience inside Big 4 delivery teams, then re-engineered to actually work in the real world. When you understand the true meaning of an AI audit, you realize the Big 4’s version is just the introductory slide to a much longer and more complex presentation.
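To make the data integrity step described above concrete, here is a minimal sketch of the kind of completeness and duplicate check such a review might begin with. It is an illustration under stated assumptions, not the A2A tooling itself: the function name `integrity_report`, the row-as-dictionary layout, and the field names are all hypothetical.

```python
from typing import Any, Dict, List, Sequence


def integrity_report(rows: List[Dict[str, Any]],
                     required_fields: Sequence[str]) -> Dict[str, Any]:
    """Summarize missing values and exact duplicates in a training set.

    Assumes each row is a dict and that field values are hashable
    (hypothetical layout chosen for illustration).
    """
    total = len(rows)
    missing = {field: 0 for field in required_fields}
    seen = set()
    duplicates = 0

    for row in rows:
        # Count a field as missing when it is absent, None, or an empty string.
        for field in required_fields:
            if row.get(field) in (None, ""):
                missing[field] += 1
        # Two rows are duplicates when every required field matches.
        key = tuple(row.get(field) for field in required_fields)
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)

    return {
        "rows": total,
        "missing_rate": {
            field: (count / total if total else 0.0)
            for field, count in missing.items()
        },
        "duplicate_rows": duplicates,
    }
```

A report like this only covers completeness and duplication. Checking whether the data is representative or unbiased needs domain-specific tests on top of it.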

3. “AI Audits and Inspections”: Why the Big 4 Approach Stops Short

Another trending phrase in the industry is “big 4 ai audits and inspections.” The terminology implies hands-on investigation, technical deep dives, and rigorous testing—but the reality is usually desk-based analysis at best.

A Big 4 “inspection” often involves reviewing documentation rather than examining data. They’ll meticulously check compliance policies, conduct extensive interviews with managers and stakeholders, and produce an immaculate PowerPoint deck summarizing the findings in executive-friendly language. The deliverable looks impressive, complete with frameworks, maturity models, and roadmaps for improvement.

But the algorithm? Untouched. The training data? Unexamined. The model’s actual decision-making process? Treated as a black box that doesn’t require opening.

At AIAuditExperts.com, we’ve seen this pattern repeatedly—because we helped design those legacy processes when we were on the inside. Back then, AI systems were just emerging, and most audit frameworks were retrofitted from finance and cybersecurity playbooks. The methodologies that worked for reviewing financial controls or IT infrastructure were simply adapted with minimal modification for AI systems, even though the technology requires fundamentally different approaches.

That’s why Big 4 inspections look impressive but miss the engine under the hood. They’re built on frameworks designed for a different era and a different type of technology.

Our A2A inspections dive straight into the technical core: algorithmic bias and drift analysis to detect when models begin performing differently over time, data lineage and versioning to track how training data evolves and impacts outcomes, model explainability testing to understand how decisions are actually being made, and human-in-the-loop interaction reviews to assess how people and AI systems collaborate in practice.

It’s not about producing “trust statements” or confidence declarations. It’s about generating proof through rigorous testing and evidence-based analysis. That’s the fundamental shift from compliance to confidence—and it’s what clients are increasingly demanding as AI becomes more central to business operations.
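As one illustration of what drift analysis can look like in code, the sketch below computes a Population Stability Index (PSI) between a baseline sample of model scores and a more recent one. PSI is a widely used drift statistic, but we are not claiming it is the specific measure used in any particular audit. The function name, the equal-width binning, and the small floor applied to empty bins are assumptions made for this example.

```python
import math
from typing import List, Sequence


def population_stability_index(baseline: Sequence[float],
                               current: Sequence[float],
                               bins: int = 10) -> float:
    """PSI between two score samples, using equal-width bins over the baseline range."""
    if not baseline or not current:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(baseline), max(baseline)
    if hi == lo:
        raise ValueError("baseline has no spread, so it cannot be binned")
    width = (hi - lo) / bins

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for value in values:
            index = int((value - lo) / width)
            # Clamp values outside the baseline range into the edge bins.
            index = min(max(index, 0), bins - 1)
            counts[index] += 1
        # A small floor avoids log(0) when a bin is empty (an assumption of this sketch).
        floor = 1e-6
        return [max(count / len(values), floor) for count in counts]

    p = proportions(baseline)
    q = proportions(current)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))
```

A commonly cited rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift. Those cut-offs are conventions rather than guarantees, so they should be calibrated per model.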

4. Big 4 AI Audits in Healthcare: A Case of Policy Over Patients

The healthcare sector is one of the Big 4’s favorite showcase industries. Every major firm now advertises its “AI audits in healthcare” as a comprehensive solution for patient trust and clinical integrity. They understand that healthcare represents a perfect storm of AI opportunity and ethical responsibility, making it an ideal market for their services.

But the truth? Most of those engagements are documentation-heavy and model-light.

We’ve been in those hospitals, sat in those steering meetings, and read those glossy “AI assurance” decks. They look impressive—filled with flowcharts showing governance structures, frameworks mapping to regulatory requirements, and comprehensive summaries of compliance obligations—but rarely include a single test of the algorithm’s actual medical performance.

At AIAuditExperts.com, we built A2A to flip that script entirely. In a typical healthcare AI audit, we simulate thousands of patient profiles to test model fairness across diverse populations. We measure false positives and negatives across different demographic groups to identify disparities in diagnostic accuracy. We validate staff training programs and AI override procedures to ensure clinicians can appropriately intervene when AI recommendations seem questionable. We review ethical risk and regulatory alignment side-by-side, ensuring that both moral obligations and legal requirements are met simultaneously.

That’s what an AI inspection should look like—practical, evidence-based, and clinically grounded. It requires understanding both the technology and the medical context in which it operates.
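Measuring false positives and negatives across demographic groups can be sketched in a few lines. The example below assumes binary labels (1 for condition present, 0 for absent) and a simple `(group, y_true, y_pred)` record layout. Both the layout and the function name `error_rates_by_group` are hypothetical choices for illustration, not a description of a specific clinical system.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def error_rates_by_group(
    records: Iterable[Tuple[str, int, int]]
) -> Dict[str, Dict[str, float]]:
    """Compute false positive and false negative rates per group.

    Each record is (group, y_true, y_pred) with binary labels 0/1.
    """
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for group, y_true, y_pred in records:
        if y_true == 1:
            key = "tp" if y_pred == 1 else "fn"
        else:
            key = "fp" if y_pred == 1 else "tn"
        counts[group][key] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]  # cases where the condition is truly absent
        positives = c["tp"] + c["fn"]  # cases where the condition is truly present
        rates[group] = {
            "false_positive_rate": c["fp"] / negatives if negatives else float("nan"),
            "false_negative_rate": c["fn"] / positives if positives else float("nan"),
        }
    return rates
```

A large gap in false negative rate between groups is the kind of disparity a documentation review cannot surface, because it only shows up when predictions are compared with outcomes.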

Because in healthcare, missing a model error isn’t merely a spreadsheet issue or a compliance violation—it’s a human one with potentially life-threatening consequences. When an AI system misdiagnoses a condition or recommends inappropriate treatment, real patients suffer real harm. That’s why superficial policy reviews are fundamentally insufficient in this context.

5. Big 4 AI Audits of Employee Systems: The Fairness Facade

Another booming market for “Big 4 AI audits” is HR and people analytics. You’ll see endless case studies about ethical hiring practices and AI fairness in recruitment. The firms have recognized that employee-facing AI systems represent both tremendous opportunity and significant reputational risk for their clients.

But again—we’ve delivered those audits inside Big 4 teams. We know the internal playbook intimately: check GDPR compliance and data protection requirements, confirm vendor bias statements and third-party assessments, produce “Responsible AI” summary slides for executive presentation. Check, check, check.

What’s rarely tested is the system’s real-world fairness in actual hiring or evaluation scenarios.

We’ve discovered critical gaps that typical Big 4 audits miss entirely. AI recruitment filters that quietly reject minority candidates based on proxy variables that correlate with protected characteristics. Sentiment analysis tools that consistently misread tone from non-native English speakers, penalizing perfectly qualified candidates. Performance scoring AI that inadvertently punishes remote workers or neurodiverse employees whose working styles differ from the training data norm.

At AIAuditExperts.com, our A2A fairness track goes beyond ethics to evidence. We don’t simply review policies about fairness—we test for it directly. We recreate hiring or scoring scenarios with controlled variables, test them using rigorous statistical methods, and document measurable bias or misalignment with organizational values.

Because “fairness” isn’t a philosophy to be debated in conference rooms—it’s a dataset to be analyzed, tested, and improved. Real fairness requires real testing, not theoretical frameworks.
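One way to recreate a scoring scenario with controlled variables is a counterfactual test: score the same candidate profile twice, changing only one attribute, and record the difference. The sketch below assumes the system under test can be called as a function on a profile dictionary. The names `counterfactual_gap` and `toy_scorer`, and the `postcode_band` attribute, are hypothetical stand-ins rather than parts of any real product.

```python
from typing import Any, Callable, Dict, List

Profile = Dict[str, Any]


def counterfactual_gap(score: Callable[[Profile], float],
                       profiles: List[Profile],
                       attribute: str,
                       value_a: Any,
                       value_b: Any) -> Dict[str, float]:
    """Score each profile twice, varying only `attribute`, and summarize the gap."""
    if not profiles:
        raise ValueError("at least one profile is required")
    diffs = []
    for profile in profiles:
        # Everything except the attribute under test is held constant.
        variant_a = {**profile, attribute: value_a}
        variant_b = {**profile, attribute: value_b}
        diffs.append(score(variant_a) - score(variant_b))
    return {
        "mean_gap": sum(diffs) / len(diffs),
        "share_changed": sum(1 for d in diffs if d != 0) / len(diffs),
    }


def toy_scorer(profile: Profile) -> float:
    """Hypothetical model that penalizes one postcode band, a possible proxy variable."""
    penalty = 2.0 if profile["postcode_band"] == "B" else 0.0
    return float(profile["years_experience"]) - penalty
```

A non-zero `mean_gap` on an attribute that should be irrelevant is a signal to investigate further. Deciding whether the gap is statistically meaningful would need a paired significance test on a larger sample, which this sketch leaves out.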

6. Big 4 AI Audits Examples: When Branding Outruns Capability

The Big 4 love to publish “AI audits examples” to demonstrate their progress and capabilities—from automated underwriting in insurance to smart logistics optimization in supply chains. These case studies are carefully crafted to showcase innovation and thought leadership.

But look closer and most examples are proof-of-concepts or pilot projects, not deep audits of production systems. They represent what’s possible rather than what’s actually being delivered to most clients.

We’re not saying these examples are bad or misleading. We helped design some of those early pilots when we were still inside large consultancies. But as AI evolved rapidly, those frameworks didn’t keep pace. The methodologies that worked for first-generation AI systems in controlled environments don’t scale to the complex, continuously learning systems that enterprises deploy today.

Now, the world needs continuous AI auditing—not one-off compliance snapshots taken annually or quarterly. AI systems change constantly as they ingest new data and adapt to evolving patterns. An audit that examines a model’s performance in January tells you almost nothing about how it’s performing in June.

That’s why AIAuditExperts.com built A2A as a living process rather than a static assessment: real-time bias tracking that identifies drift as it occurs, model drift alerts that notify stakeholders when performance deviates from baseline, and ongoing operational scoring that measures AI effectiveness continuously.

Where Big 4 firms deliver quarterly slides summarizing historical performance, we deliver daily insights that enable proactive management. It’s the difference between a rearview mirror and a forward-looking radar system.
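The idea of alerting when performance deviates from a baseline can be sketched as a rolling-window monitor. This is a simplified illustration, not the A2A monitoring stack: the class name `DriftMonitor`, the window size, and the tolerance are assumptions, and it presumes ground-truth outcomes eventually arrive for each prediction.

```python
from collections import deque
from typing import Any


class DriftMonitor:
    """Flag when rolling accuracy falls below a baseline by more than a tolerance."""

    def __init__(self, baseline_accuracy: float,
                 window: int = 100, tolerance: float = 0.05) -> None:
        self.baseline_accuracy = baseline_accuracy
        self.tolerance = tolerance
        # A bounded deque keeps only the most recent `window` outcomes.
        self.outcomes = deque(maxlen=window)

    def record(self, prediction: Any, actual: Any) -> bool:
        """Record one outcome and return True if an alert should fire."""
        self.outcomes.append(prediction == actual)
        if len(self.outcomes) < self.outcomes.maxlen:
            return False  # not enough data yet to judge
        accuracy = sum(self.outcomes) / len(self.outcomes)
        return (self.baseline_accuracy - accuracy) > self.tolerance
```

In use, each prediction is passed to `record` once its true outcome is known, and a `True` return would trigger a notification to whoever owns the model. Accuracy is only one possible signal, and the same pattern could track a fairness metric or a score-distribution statistic instead.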

7. The Insider Advantage: We Know the Big 4 Playbook

We don’t just critique the Big 4 from the outside—we understand them intimately from years of internal experience. Many of our consultants spent significant portions of their careers inside Big 4 and Tier 1 consulting firms, leading digital transformation and AI implementation projects across the UK, Europe, the Middle East, and Asia.

We learned how their teams are structured and how different practice areas collaborate or compete internally. We understand how projects are priced and scoped, often prioritizing factors like staff utilization and margin over optimal client outcomes. We’ve seen how deliverables are shaped by internal templates and review processes that prioritize consistency over innovation.

That’s why AI Audit Experts exists—because we saw brilliant, capable people trapped inside outdated systems and frameworks. We witnessed talented technologists constrained by methodologies designed for a previous technological era. We experienced the frustration of knowing what clients truly needed while being limited by what the firm’s standard offerings provided.

We took that institutional knowledge and built something more agile, transparent, and genuinely technical. The A2A framework is our answer to the Big 4’s bureaucracy, offering faster setup without lengthy scoping and contracting processes, deeper data inspection that examines algorithms rather than just policies, clearer ROI mapping that connects AI performance to business outcomes, and continuous validation that keeps pace with evolving AI systems.

We respect the Big 4 legacy and the value they’ve delivered to clients over decades. But we also recognize that the future of AI assurance won’t be built in boardrooms through PowerPoint presentations. It’ll be built in the data, through rigorous testing, and via methodologies specifically designed for AI’s unique characteristics.

8. Why A2A Is Redefining “AI Audit” for the Real World

“How AI Audit Experts Is Redefining AI Assurance” shows that a true AI audit is not about compliance documents that sit in digital folders unread or ethics checklists that provide superficial reassurance. It’s about verifying how an AI system behaves in reality: examining its actual decision-making patterns, testing its performance across diverse scenarios, and determining whether that behavior is accurate, fair, and valuable to the organization.

That’s what A2A (Audit-to-Action) delivers in practice: real testing using controlled experiments and statistical analysis, not theoretical reviews based on documentation; actionable insights that enable immediate improvements, not executive slides that simply summarize the current state; and continuous accountability through ongoing monitoring, not annual compliance exercises that quickly become outdated.

It’s auditing that connects data quality to decision quality, model performance to financial performance, and technical risk to business ROI. Every finding in an A2A audit links directly to a specific action that can improve outcomes, reduce risk, or enhance efficiency.

If you want an audit that gives you answers rather than adjectives, evidence rather than assurances, and action items rather than awareness—you’re in the right place.

Get the AI Audit That Actually Works

The Big 4 can give you assurance through their established frameworks and prestigious brand names. We give you action through rigorous testing and evidence-based recommendations.

If your business uses AI in healthcare, HR, finance, or operations, don’t settle for another compliance-only review that checks boxes without examining what’s actually happening inside your AI systems. Don’t accept deliverables that look impressive in boardroom presentations but fail to identify the risks lurking in your algorithms and data.

Get a true AI audit that inspects your models thoroughly, examines your data quality rigorously, and evaluates your decision-making processes comprehensively—from the inside out.

Download our free AI Audit Reality Checklist—and discover what your last consultancy didn’t test, didn’t measure, and didn’t report. Use it to evaluate your current AI governance and identify gaps that may be exposing your organization to risk.

Or book a Discovery Audit at AIAuditExperts.com—and see the A2A framework in action. Experience firsthand how technical AI auditing differs from traditional compliance reviews.

We built the methodology the Big 4 wish they had—one designed specifically for AI rather than retrofitted from legacy frameworks. Now, we’re using it to make AI audits actually mean something tangible, measurable, and valuable.

The era of accepting vague assurances about AI trustworthiness is over. The future belongs to organizations that demand proof, not promises—evidence, not buzzwords. Welcome to AI auditing that actually works.