Type “Big 4 AI audits meaning” or “Big 4 AI audits definition” into Google and you’ll find yourself drowning in a sea of corporate jargon. Terms like “AI assurance,” “responsible AI,” and “algorithmic trust frameworks” dominate the search results, yet clarity remains elusive.

Here’s an uncomfortable truth: ask ten consultants from Deloitte, PwC, EY, or KPMG what an AI audit actually is, and you’ll likely get twelve different answers. The arithmetic is tongue-in-cheek, but the observation is born from experience.

Many of us at AIAuditExperts.com used to work inside the Big 4. We wrote the presentation decks, developed the frameworks, and crafted the RFP responses that promised comprehensive AI auditing services. We delivered those projects to major corporations and government agencies. But we also witnessed something troubling: a significant gap between what clients believed they were purchasing and what they actually received.

That gap—between expectation and reality, between marketing promises and delivered value—is precisely why we built the A2A (Audit-to-Action) methodology. We saw the limitations of the traditional Big 4 approach from the inside, and we knew there had to be a better way.

Naturally, this led us to redefine AI assurance at AI Audit Experts, building a process that focuses on actionable insights rather than just paperwork.

So let’s cut through the marketing fog and explain what an AI audit really is, how the Big 4 typically approach it, and why their version stops halfway to where organizations actually need to go.

1. How the Big 4 Define AI Audits — and Why It Doesn’t Work

Each of the Big 4 accounting and consulting firms has developed its own branded version of essentially the same service offering. Deloitte calls their approach Trustworthy AI. PwC brands their services as Responsible AI. EY sells AI Assurance to their clients. KPMG wraps their offerings under the banner of Trusted AI.

These names sound impressive and comprehensive. But strip away the shiny branding and polished marketing materials, and you’ll discover one consistent pattern across all four firms: their AI audits primarily consist of policy reviews, risk workshops, and compliance checklists.

This approach isn’t accidental—it’s structural. The Big 4 firms built their reputations on financial auditing, not on engineering or data science. Their entire organizational model, from billing structures to staffing patterns, rewards documentation and process verification, not technical experimentation or empirical testing.

A typical “AI audit” engagement delivered by a Big 4 firm includes tasks like these: reviewing AI policy statements, interviewing data-governance officers, and mapping identified risks against ESG frameworks or ISO standards. All of these activities have value, certainly. But here’s what they don’t do: none of them actually proves that the AI model works as intended.

When we were still working inside these firms, we saw firsthand that most “AI assurance” projects were fundamentally designed to tick regulatory boxes rather than to test algorithms. The deliverables focused on demonstrating compliance with governance frameworks, not on validating technical performance.

This is like checking whether a pilot has read the flight manual without ever verifying if the plane flies straight. The paperwork might be perfect, but the fundamental question—does this system actually work?—remains unanswered.

2. The Real Definition of an AI Audit

At AIAuditExperts.com, we believe in clarity over clever branding. Here’s our straightforward definition:

An AI audit is an independent, technical, and operational evaluation of how artificial intelligence systems behave, impact outcomes, and align with ethical and regulatory standards.

Notice what’s emphasized here: behavior, impact, and outcomes. An AI audit isn’t a workshop series. It isn’t a whitepaper or policy document. It’s an inspection—a rigorous examination of what goes into the system (data in) and what comes out of it (decisions out).

The Four Pillars of a Real AI Audit (A2A Framework)

Our A2A framework rests on four essential pillars:

Data Integrity – Is your training data clean, unbiased, and complete? We examine the datasets that feed your AI systems, looking for gaps, errors, and potential sources of bias that could compromise model performance.

Model Validation – Does the algorithm perform reliably under real-world conditions? We test the model’s actual behavior, not just its theoretical capabilities, using scenarios that reflect genuine operational environments.

Operational Adoption – Are employees using the AI system responsibly and effectively? The best algorithm in the world delivers no value if humans misuse it, ignore its outputs, or fail to integrate it properly into workflows.

ROI & Risk Correlation – Does the AI system save money, reduce risk, or add measurable value to the organization? We connect technical performance to business outcomes, ensuring that AI investments deliver tangible returns.
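The Data Integrity pillar can start with checks as simple as the following Python sketch, which reports how often required fields are missing and how each group is represented in a training set. The row layout, field names, and the `data_integrity_report` function are illustrative assumptions, not part of a published A2A toolkit; a real review would likely add checks for label errors, duplicates, and data provenance.

```python
from collections import Counter


def data_integrity_report(rows, required_fields, group_field):
    """Summarize two basic integrity signals for a training set.

    rows: non-empty list of dicts (an assumed, illustrative layout).
    Returns the share of rows missing each required field (None or empty
    string) and the share of rows belonging to each value of group_field.
    """
    if not rows:
        raise ValueError("rows must not be empty")
    n = len(rows)
    missing = {
        field: sum(1 for r in rows if r.get(field) in (None, "")) / n
        for field in required_fields
    }
    groups = Counter(r.get(group_field, "unknown") for r in rows)
    representation = {g: count / n for g, count in groups.items()}
    return {"missing_rate": missing, "representation": representation}


# Synthetic rows for illustration only.
rows = [
    {"age": 34, "income": 52000, "region": "north"},
    {"age": None, "income": 48000, "region": "north"},
    {"age": 51, "income": "", "region": "north"},
    {"age": 29, "income": 61000, "region": "south"},
]
print(data_integrity_report(rows, ["age", "income"], "region"))
```

A heavily skewed representation share is not proof of bias on its own, but it tells the Model Validation step which groups need the closest scrutiny.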

This operational definition—grounded in empirical testing and business impact—is what’s missing from most Big 4 approaches.

Consider this comparison:

| Aspect | Big 4 Version | AIAuditExperts Version |
| --- | --- | --- |
| Focus | Governance & compliance | Behavior & performance |
| Deliverable | Policy report | Tested model report |
| Team | Risk managers + consultants | Data scientists + auditors |
| Outcome | Assurance statement | Action plan + ROI metrics |

When clients see this table during our discovery conversations, the difference becomes immediately clear. One approach audits paperwork; the other audits performance.

3. Meaning vs Reality — Why Most AI Audits Are Still Slideware

Let’s walk through what typically happens behind closed doors when an organization engages a Big 4 firm for an AI audit.

A client recognizes the need for AI auditing—perhaps because regulators are asking questions, or investors want assurance, or the board is nervous about liability. They reach out to one of the Big 4 firms. The proposal arrives: twelve weeks of work, a price tag of £250,000, and a promise of comprehensive assessment.

The engagement kicks off. Consultants conduct interviews. Workshops are scheduled. Documents are reviewed. And at the end of the project, the client receives a 90-page slide deck filled with governance matrices, ethical-risk rankings, and colorful “AI principles heatmaps.”

What you won’t find in that deck? Actual model testing. Real data analysis. Empirical evidence of how the AI system performs.

Why this gap? Because the delivery teams on these projects rarely include data scientists or machine learning engineers. When we were inside those projects, we saw repeatedly that any technical analytics work was either outsourced to external specialists, quietly scaled back, or simply skipped entirely.

The result is that clients walk away with beautiful slides—professionally designed, impressively comprehensive in scope—but zero technical assurance about their AI systems.

Here’s a concrete example that illustrates the difference. Imagine a hospital that has installed an AI-powered diagnostic tool to help physicians identify diseases from medical imaging.

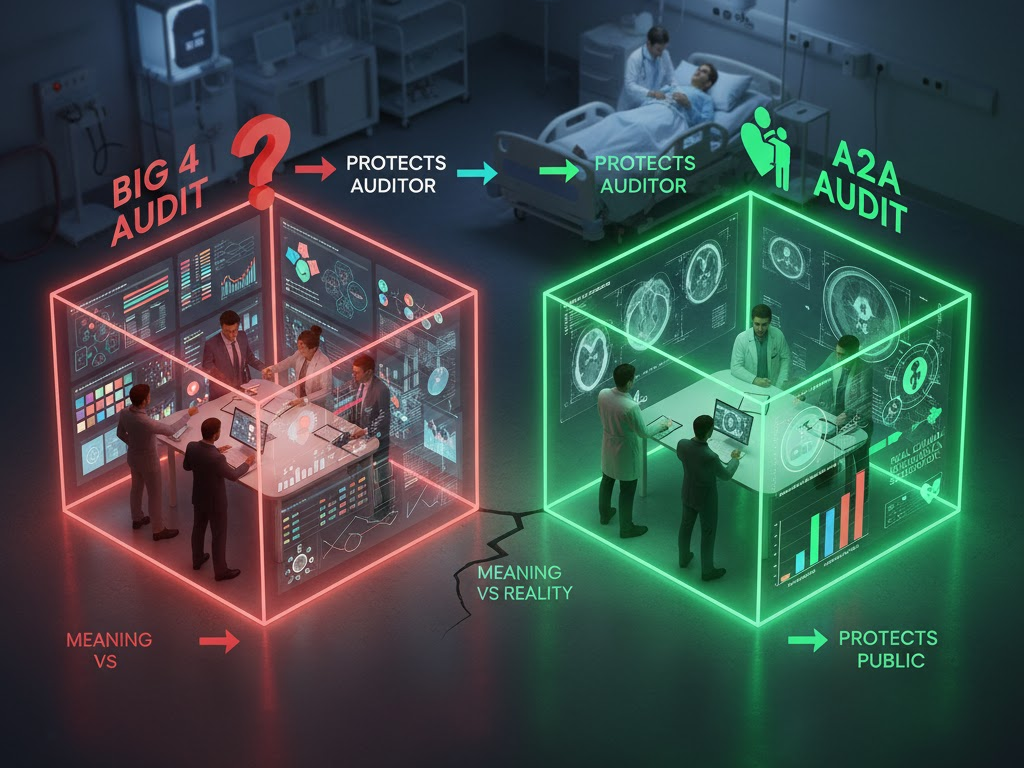

The Big 4 audit approach would verify that the hospital has a “bias policy” on file, that there’s a governance committee overseeing AI deployment, and that staff have received training on ethical AI principles.

Our A2A audit would test whether the model actually misdiagnoses patients from minority backgrounds at higher rates than majority populations. We’d examine false-positive and false-negative rates across demographic groups. We’d simulate real clinical scenarios to see how the system performs under pressure.
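To make “false-positive and false-negative rates across demographic groups” concrete, here is a minimal Python sketch. It assumes you already hold, for each patient in a held-out test set, a group label, the confirmed diagnosis, and the model’s prediction; the tuple layout and the `error_rates_by_group` name are hypothetical, and the data is a toy example rather than real clinical results.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Compute false-positive and false-negative rates per demographic group.

    records: iterable of (group, y_true, y_pred) tuples with 0/1 labels,
    where 1 means "disease present". This layout is an illustrative assumption.
    """
    counts = defaultdict(lambda: {"fp": 0, "tn": 0, "fn": 0, "tp": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        if y_true == 0:
            c["fp" if y_pred == 1 else "tn"] += 1
        else:
            c["tp" if y_pred == 1 else "fn"] += 1

    rates = {}
    for group, c in counts.items():
        negatives = c["fp"] + c["tn"]
        positives = c["tp"] + c["fn"]
        rates[group] = {
            # FPR: healthy patients wrongly flagged as diseased
            "fpr": c["fp"] / negatives if negatives else float("nan"),
            # FNR: diseased patients the model missed
            "fnr": c["fn"] / positives if positives else float("nan"),
        }
    return rates


# Toy data for illustration only, not real clinical results.
sample = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 0, 0), ("group_a", 0, 1),
    ("group_b", 1, 0), ("group_b", 1, 1), ("group_b", 0, 0), ("group_b", 0, 0),
]
for group, r in sorted(error_rates_by_group(sample).items()):
    print(group, r)
```

A large gap between groups in either rate is the kind of finding a policy review cannot surface. In practice, small groups produce noisy rates, so an audit would also want confidence intervals before drawing conclusions.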

See the difference? One approach protects the auditor from liability. The other protects the public from harm.

4. What Clients Think They’re Buying vs What They Need

Most organizations request an AI audit because external pressure compels them to. Regulators are demanding evidence of responsible AI practices. Investors want assurance that AI systems won’t create legal or reputational risks. Board members read headlines about algorithmic bias and want to know if their company is vulnerable.

These clients come to the table with reasonable expectations. They want concrete answers: Is our AI accurate? Is it fair? Does it comply with emerging regulations? Is it actually profitable, or are we throwing money at technology that doesn’t deliver value?

Instead of these clear answers, they typically receive what we call “assurance language”—carefully worded statements like “reasonable confidence in the governance framework” and “general alignment with ethical principles.” These phrases sound reassuring but provide no empirical basis for confidence.

The Reality Gap

| Category | What They Buy | What They Need |
| --- | --- | --- |
| Governance | Policy review | Bias + accuracy testing |
| Risk | Qualitative matrix | Quantitative evidence |
| Outcome | Compliance badge | Continuous improvement plan |

This reality gap—between purchased services and actual needs—is why we rebuilt the AI audit approach from the ground up. Our A2A methodology was born from consultants who used to run Big 4 IT implementations and grew tired of delivering audits that never actually touched the technology being audited.

5. Why Definitions Matter — The Regulator’s Blind Spot

Governments and standards bodies worldwide are now scrambling to define what “AI audit” means in regulatory terms. The European Union’s AI Act, state and local rules in the United States (New York City’s Local Law 144, for example, requires bias audits of automated hiring tools), and emerging frameworks in Asia all reference some form of AI audit or assessment.

But without clear, standardized definitions, Big 4 marketing departments fill the vacuum. Their branded frameworks and proprietary methodologies become the de facto standard—not because they’re technically superior, but because they’re well-marketed and widely distributed.

This creates a dangerous situation. Vague definitions enable false confidence. A company can publicly claim to have “passed an AI audit” when all that actually occurred was a documentation review by consultants who never examined the algorithm’s actual behavior.

This isn’t just semantically problematic—it’s genuinely dangerous. It gives boards and executives comfort without providing actual control. It creates the illusion of oversight while the underlying risks remain unaddressed.

We’re working to change this by promoting A2A as a working definition of what a complete AI audit should encompass—one that covers data integrity, model performance, and decision-chain validation, not just policy compliance.

6. From Definition to Delivery — Healthcare and HR Examples

Abstract definitions only matter if they translate into concrete practice. Let’s look at two real-world scenarios where the difference between Big 4 audits and A2A audits becomes crystal clear.

Healthcare AI Example

Big 4 healthcare AI audits typically focus on verifying governance structures: who signs off on AI deployment decisions, how patient data is handled throughout the system, which ethics boards approved the AI system, and what policies govern its use.

These are important questions, but they’re not sufficient. Our team takes the audit further by running controlled tests. We simulate diverse patient profiles and check false-positive rates across demographic groups. We validate that the system doesn’t exhibit clinical bias that could lead to misdiagnosis or inappropriate treatment recommendations. This is what we mean by an AI inspection rather than an AI seminar. We’re not discussing the theoretical risks of bias; we’re measuring whether bias exists in practice.

HR Example

Large organizations increasingly rely on AI-powered hiring tools to screen candidates, rank applications, and even conduct preliminary interviews. Big 4 firms audit these systems primarily for privacy compliance, verifying that candidate data is handled according to GDPR or other privacy regulations.

We go beyond privacy to examine performance and fairness. We rebuild test environments using real CV data (appropriately anonymized), then check whether candidates from different backgrounds receive equal scores for equivalent qualifications. We examine whether the algorithm penalizes career gaps that disproportionately affect women or systematically downranks candidates from certain universities. This level of detailed testing is what it takes to show that AI systems in human resources are truly equitable.
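One way to check “equal scores for equivalent qualifications” is a matched-pair (counterfactual) test: score each CV, change exactly one attribute, and score it again. The Python sketch below assumes a hypothetical `toy_score` function standing in for the hiring model under audit, with made-up field names and synthetic CVs; it illustrates the technique, not any vendor’s actual scoring logic.

```python
from statistics import mean


def toy_score(cv):
    """Hypothetical stand-in for the screening model under audit.

    It deliberately docks points for long career gaps so the test has something to find.
    """
    score = 10 * cv["years_experience"] + 5 * cv["certifications"]
    if cv["career_gap_months"] > 6:
        score -= 15
    return score


def paired_score_gaps(cvs, score_fn, attribute, counterfactual_value):
    """Score each CV as-is and again with a single attribute changed.

    All other fields are held constant, so each difference (altered minus
    original) isolates the effect of that one attribute on the score.
    """
    gaps = []
    for cv in cvs:
        altered = dict(cv)  # copy so the original record is left untouched
        altered[attribute] = counterfactual_value
        gaps.append(score_fn(altered) - score_fn(cv))
    return gaps


# Synthetic CVs with no career gap; the test asks what a 12-month gap does to each score.
cvs = [
    {"years_experience": 5, "certifications": 2, "career_gap_months": 0},
    {"years_experience": 8, "certifications": 1, "career_gap_months": 0},
    {"years_experience": 3, "certifications": 3, "career_gap_months": 0},
]
gaps = paired_score_gaps(cvs, toy_score, "career_gap_months", 12)
print(gaps, "mean gap:", mean(gaps))
```

In a real engagement `score_fn` would call the vendor’s model or a rebuilt copy of it, and the same harness can swap a university name or another proxy field. A consistently negative gap means the model is scoring the attribute itself rather than the qualifications.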

These two examples illustrate why the definition of “AI audit” must include empirical testing and evidence-based validation. Without that technical component, AI auditing becomes what we call “trust theatre”—a performance of due diligence that provides comfort but not actual protection.

7. How A2A Bridges the Gap

The A2A framework was built by people who spent years inside the Big 4 machine and decided there had to be a better way. We understood the system from the inside—its strengths and its severe limitations.

We kept what worked: the discipline, the structured approach, the professional rigor that comes from decades of audit tradition. But we eliminated what didn’t work: the bureaucracy, the emphasis on documentation over outcomes, and the separation between technical teams and audit teams.

A2A Key Features

Continuous assurance instead of annual audits – AI systems change constantly as they’re retrained on new data and deployed in new contexts. Annual audits create blind spots where risks can emerge undetected. Our approach provides ongoing monitoring.

Real-time bias and drift tracking – We implement systems that alert organizations when AI models begin to drift from their baseline performance or when bias metrics start trending in concerning directions.

Cross-functional teams – Every A2A engagement combines consultants who understand business context and risk with data scientists who can actually examine model behavior. This integration is essential and non-negotiable.

ROI dashboards linking audit results to business value – We don’t just identify problems; we quantify their business impact and track whether corrective actions deliver measurable improvements.
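The drift half of “real-time bias and drift tracking” is often implemented as a distribution-shift statistic recomputed on a schedule. The Python sketch below uses the Population Stability Index (PSI), one common choice; the function names, the ten equal-width bins, and the 0.25 alert threshold (a widely quoted rule of thumb) are assumptions for illustration, not A2A’s documented configuration.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a baseline sample and a recent sample.

    Bin edges are equal-width over the baseline's range; eps stands in for
    empty bins so the log and division never see zero.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back if the baseline is constant

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Values outside the baseline range are clamped into the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(baseline_scores, recent_scores, threshold=0.25):
    """Return the PSI and whether it crosses the (assumed) alert threshold."""
    value = psi(baseline_scores, recent_scores)
    return value, value > threshold


# Illustrative model scores: a baseline window and a recent window that has shifted upward.
baseline = [i / 100 for i in range(100)]
recent = [0.5 + i / 200 for i in range(100)]
print(drift_alert(baseline, baseline))  # identical windows give (0.0, False)
print(drift_alert(baseline, recent))
```

In practice the baseline would be the model’s scores at validation time and the recent sample a rolling window of production scores. Running the same comparison separately for each demographic group is one way to catch bias metrics trending apart.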

The A2A framework represents the AI audit system we always wished we could sell when we were at the Big 4—one that actually serves client needs rather than fitting predetermined service offerings.

8. The Future Definition of AI Auditing

Tomorrow’s AI audits won’t be delivered as PDF reports that sit on shelves gathering dust. They’ll be live dashboards that provide real-time visibility into AI system performance, updated continuously as models evolve and new data flows through them.

They won’t be run by risk managers alone, working in isolation from the technical teams who build and maintain AI systems. Instead, they’ll be conducted by integrated teams that understand data science, business ethics, and operational workflows in equal measure.

The Big 4 firms can rebrand and rename their services as often as they like, but they can’t easily outrun their fundamental structure. Their business model, organizational hierarchy, and talent pool all evolved for a different kind of auditing, one focused on financial statements and compliance documentation rather than on algorithmic behavior and technical performance.

Meanwhile, specialist firms like AI Audit Experts are building the new standard from the ground up, unencumbered by legacy systems and traditional service-line boundaries.

We’re not anti-Big 4. Many of us built our careers there and learned invaluable lessons about professional rigor and client service. We’re just pro-truth. And the truth is that AI auditing requires a fundamentally different approach than traditional financial auditing—one that the Big 4’s current structure struggles to deliver.

Download Your Free Guide: The 5 Levels of AI Auditing

If you’re still unsure where your organization stands in terms of AI audit maturity, we’ve created a resource specifically to help you assess your current state.

Download “The 5 Levels of AI Auditing”—a quick self-assessment tool based on the A2A framework. This guide will help you determine whether your AI systems are being audited for paperwork or for actual performance.

Alternatively, book a Discovery Audit at AIAuditExperts.com and get a 30-minute review with our ex-Big 4 team. We’ll provide an honest assessment of your current AI audit approach and identify specific gaps that need addressing.

AIAuditExperts.com—we built the definition the Big 4 wish they had.